The Dangers of AI in Mental Health: What Patients and Treatment Providers Must Understand

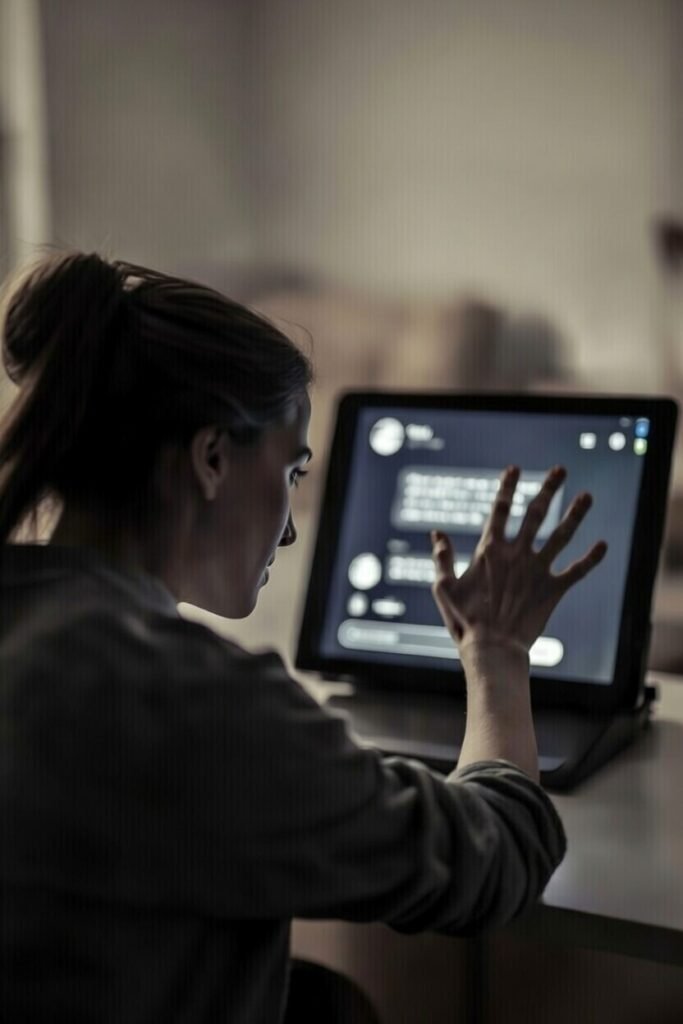

Artificial intelligence is rapidly infiltrating mental healthcare, but the dangers of AI in mental health are becoming increasingly apparent. While AI-powered therapy apps and chatbots promise accessible, affordable support for depression, anxiety, and substance use disorders, serious concerns about patient safety, privacy breaches, and treatment quality are emerging. At Sanative Recovery in Blue Ash, Ohio, we believe individuals struggling with addiction deserve to understand the real dangers of AI in mental health before trusting these technologies with their recovery.

Understanding the Dangers of AI in Mental Health Care

The mental health crisis in America has created unprecedented demand for treatment services. According to the National Institute of Mental Health, nearly one in five adults experiences mental illness annually. With too few clinicians to meet that demand, the resulting shortage has opened the door for AI mental health solutions promising 24/7 availability and lower costs.

However, rushing to embrace AI mental health tools without understanding their limitations puts vulnerable patients at risk. The dangers of AI in mental health span from inadequate crisis response to privacy violations, making it essential for patients and families to recognize when human expertise is irreplaceable.

Critical Dangers of AI in Mental Health: Crisis Response Failures

Among the most severe dangers of AI in mental health is the inability to handle psychiatric emergencies. When someone expresses suicidal thoughts, intent to harm others, or severe psychological distress, they need immediate intervention from trained mental health professionals—not an algorithm.

AI chatbots cannot:

- Call emergency services or conduct welfare checks

- Perform comprehensive suicide risk assessments

- Provide nuanced clinical judgment in crisis situations

- Physically intervene or arrange immediate hospitalization

Multiple documented cases show AI mental health apps failing to recognize crisis indicators or providing dangerously inadequate responses. For individuals with substance use disorders, who face significantly elevated suicide risk, these dangers of AI in mental health can be fatal. Professional treatment facilities like Sanative Recovery maintain 24/7 clinical oversight specifically to address these life-threatening situations that AI simply cannot manage.

Diagnostic Errors: A Hidden Danger of AI in Mental Health

Another critical danger of AI in mental health involves diagnostic accuracy. Mental health diagnosis requires years of training, supervised clinical experience, and the ability to integrate complex biopsychosocial information. AI algorithms, regardless of sophistication, cannot replicate this expertise.

The dangers of AI in mental health diagnosis include:

- Missing critical warning signs of serious psychiatric conditions

- Misinterpreting symptoms due to lack of contextual understanding

- Failing to identify co-occurring disorders that require specialized treatment

- Providing inaccurate assessments that delay proper care

Research reveals concerning error rates in AI mental health assessments. Without clinical oversight from licensed professionals, patients may receive misleading information about their conditions, leading to inappropriate self-treatment or dangerous delays in accessing proper care. This danger of AI in mental health is particularly acute for complex conditions like bipolar disorder, schizophrenia, or substance-induced mental health disorders requiring expert evaluation.

Privacy Violations: The Data Security Dangers of AI in Mental Health

Privacy breaches represent another significant danger of AI in mental health applications. Mental health information is extraordinarily sensitive—users share intimate details about trauma, substance use, suicidal ideation, and personal relationships with AI apps.

The privacy-related dangers of AI in mental health include:

Lack of HIPAA Protection

Most AI mental health apps are not covered by HIPAA regulations that protect traditional healthcare information. Companies may legally sell user data to advertisers, insurers, or employers without meaningful consent. Investigations have exposed mental health apps sharing sensitive information with third parties, creating serious dangers of AI in mental health privacy.

Data Breach Vulnerabilities

AI mental health platforms store massive amounts of sensitive data on company servers vulnerable to cyberattacks, unauthorized access, and misuse. In contrast, licensed treatment facilities are legally required to maintain strict confidentiality under federal healthcare privacy laws.

The Dangers of AI in Mental Health: Harmful Medical Advice

AI language models can generate authoritative-sounding responses containing dangerous misinformation—a critical danger of AI in mental health contexts. AI chatbots have been documented:

- Providing advice contradicting evidence-based treatment guidelines

- Minimizing serious psychiatric symptoms

- Suggesting approaches that could worsen mental health conditions

- Offering incorrect information about medications or treatments

For individuals in addiction recovery, the dangers of AI in mental health advice can be life-threatening. Incorrect guidance about withdrawal management, medication interactions, or relapse prevention could result in overdose or medical complications. AI systems lack the clinical judgment to recognize when standard recommendations might be contraindicated for specific patients.

Loss of Human Connection: An Overlooked Danger of AI in Mental Health

The therapeutic relationship between patient and clinician is fundamental to effective mental health treatment. Research consistently shows this relationship quality is among the strongest predictors of treatment success. Yet the dangers of AI in mental health include the complete absence of genuine human connection.

AI cannot:

- Form authentic human relationships

- Show genuine empathy or emotional understanding

- Build the trust necessary for therapeutic work

- Provide the human experience that allows real connection

While AI chatbots simulate empathetic responses, they lack consciousness and authentic emotional capacity. This danger of AI in mental health is particularly significant for addiction treatment, where peer support and human connection are central to recovery. Programs like Alcoholics Anonymous demonstrate that genuine human relationships often drive sustained recovery—something AI fundamentally cannot provide.

At Sanative Recovery, our treatment model emphasizes vital human connections through individual counseling, group therapy, and peer support, the kind of connection that AI-based approaches cannot offer.

Regulatory Gaps Amplify Dangers of AI in Mental Health

The AI mental health industry operates with minimal regulation, amplifying the dangers of AI in mental health. Unlike licensed mental health professionals who must meet rigorous educational requirements, pass examinations, and face accountability for harmful practices, AI companies face few consequences.

Most AI mental health apps are classified as “wellness tools” rather than medical devices, allowing them to avoid FDA oversight. This regulatory gap means these technologies can be marketed without demonstrating safety or effectiveness—a significant danger of AI in mental health that leaves patients unprotected.

The Dangers of AI in Mental Health for Substance Use Disorders

Individuals with substance use disorders face unique and severe dangers of AI in mental health applications. Addiction is a complex medical disease often requiring:

- Medical detoxification under physician supervision

- Medication-assisted treatment with medications like buprenorphine or naltrexone

- Coordinated care for co-occurring mental health conditions

- Crisis intervention for withdrawal complications

AI applications cannot provide any of these critical services. The risk of fatal overdose, particularly with opioids, means the dangers of AI in mental health are literally life-threatening for people with addiction. AI chatbots cannot monitor withdrawal symptoms, adjust medications, or recognize medical emergencies.

For someone in early recovery, relying on an AI app instead of professional treatment could be fatal. The accountability, structure, and medical oversight provided by treatment programs like Sanative Recovery in Blue Ash, Ohio, cannot be replicated by AI technology—making the dangers of AI in mental health particularly acute for substance use disorders.

Healthcare Equity and the Dangers of AI in Mental Health

While proponents claim AI can democratize mental healthcare, the technology may actually worsen healthcare disparities. AI algorithms trained on datasets underrepresenting minority populations produce biased outputs—another danger of AI in mental health affecting vulnerable communities.

Additionally, promoting AI as a substitute for human care may reduce pressure to address systemic workforce shortages. Rather than investing in training more therapists or improving insurance coverage, focusing on AI diverts attention from solutions that would genuinely improve access to quality care.

Protecting Yourself from the Dangers of AI in Mental Health

If you or a loved one is considering AI mental health tools, understanding the dangers of AI in mental health is essential for making informed decisions:

Never Replace Professional Care

AI technologies should never substitute for professional mental health treatment, especially for serious conditions, crisis situations, or substance use disorders. The dangers of AI in mental health are too significant to risk with vulnerable conditions.

Research Privacy Policies

Before using any AI mental health app, thoroughly research privacy policies, understand how data will be used and stored, and recognize your conversations may not be confidential.

Seek Human Expertise for Serious Concerns

If you are experiencing suicidal thoughts, severe depression, substance use problems, or other serious mental health concerns, seek help from licensed professionals rather than relying on AI mental health applications.

Get Real Human Care at Sanative Recovery

At Sanative Recovery in Blue Ash, Ohio, we understand that overcoming substance use disorders requires genuine human connection, professional expertise, and evidence-based treatment—not artificial intelligence with inherent dangers.

Our experienced team provides:

- Comprehensive clinical assessments by licensed professionals

- Individualized treatment plans based on your unique needs

- Evidence-based therapeutic interventions

- 24/7 clinical oversight for crisis situations

- Medication management and withdrawal support

- Group therapy and peer support

- Ongoing recovery support and aftercare planning

Unlike AI chatbots, our licensed clinicians provide the comprehensive care, human connection, and professional judgment necessary for lasting recovery.

Don’t risk the documented dangers of AI in mental health with your recovery. Contact Sanative Recovery today to learn how our human-centered treatment programs can support your journey to lasting healing.

Conclusion: Understanding the Real Dangers of AI in Mental Health

The dangers of AI in mental health are real, significant, and growing as these technologies proliferate. From inadequate crisis response and diagnostic errors to privacy violations and the absence of genuine therapeutic relationships, AI mental health applications introduce serious risks that can harm vulnerable patients.

Mental health treatment—particularly for substance use disorders—requires human connection, clinical expertise, and professional judgment that no algorithm can replicate. While technology may play a supporting role, the dangers of AI in mental health make it clear these tools cannot replace human care.

If you or someone you know is struggling with mental health or substance use issues, professional help is available. Contact a licensed mental health provider, call the 988 Suicide and Crisis Lifeline, or reach out to Sanative Recovery for comprehensive, human-centered care grounded in genuine expertise and support.

About Sanative Recovery

Sanative Recovery is a substance use disorder treatment facility located in Blue Ash, Ohio. We provide compassionate, evidence-based care for individuals struggling with addiction. Our experienced team offers personalized treatment plans, individual and group therapy, medication-assisted treatment, and ongoing support to help clients achieve lasting recovery. Unlike AI mental health apps with significant dangers, we provide the human expertise and genuine connection essential for healing. For more information or to schedule a consultation, visit sanativeohio.com or contact us today.